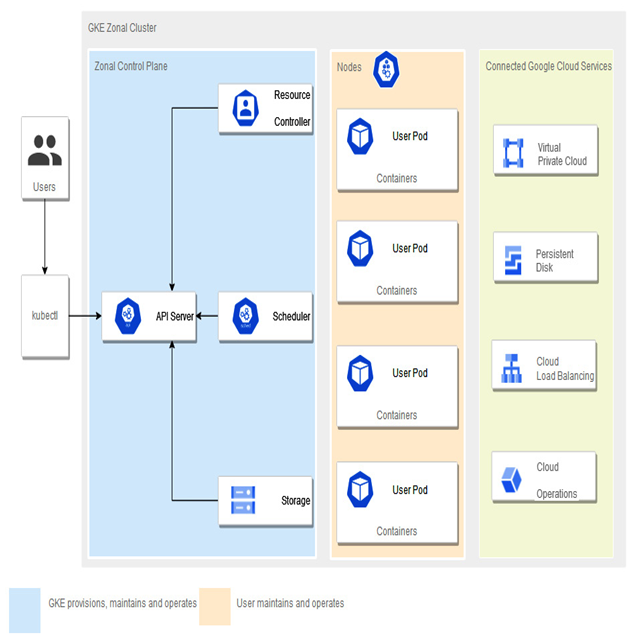

As with a pure Kubernetes architecture, a cluster is the foundation of GKE.

GKE clusters consist of one or more control planes and multiple worker machines where the workload runs, called nodes. The control plane and nodes are the main components of the container orchestration system:

Figure 5.4 – GKE standard architecture

Control plane

The control plane runs several components, such as the Kubernetes API server, the scheduler, and the resource controllers. GKE manages its life cycle when the cluster is created, deleted, or upgraded.

Nodes

Cluster nodes are also called worker machines because the containers we schedule are deployed on them. In GKE, these worker nodes are Compute Engine VM instances managed by the GKE control plane.

Each worker node receives updates from the control plane and reports its status back to it. Each node also runs the services needed to support its containers: for example, the container runtime and the Kubernetes node agent, kubelet, which communicates with the control plane and starts and stops containers on the node.

The default node machine type is e2-medium, a shared-core machine type from the cost-optimized E2 family. The E2 family as a whole supports up to 32 vCPUs and 128 GB of memory, whereas e2-medium itself provides 2 shared vCPUs and 4 GB of memory. The default machine type can be changed during the cluster creation process.
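
As an illustration of changing the machine type at creation time, the following sketch wraps gcloud container clusters create in a small helper. The helper name create_cluster is hypothetical, and the cluster name, zone, and e2-standard-4 machine type in the usage comment are assumptions chosen for the example, not values from this chapter.

```shell
# Hypothetical helper: create a GKE Standard cluster with a chosen machine type.
# $1 = cluster name, $2 = zone, $3 = machine type, $4 = node count (default 3)
create_cluster() {
  gcloud container clusters create "$1" \
    --zone "$2" \
    --machine-type "$3" \
    --num-nodes "${4:-3}"
}

# Example usage (assumed values):
#   create_cluster demo-cluster us-central1-a e2-standard-4
```

Larger machine types cost more per node, so it may be worth comparing a few sizes against the expected workload before settling on one.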

Each node runs a specialized operating system image to run the containers. At the time of writing, the following operating system images are available:

- Container-Optimized OS with containerd (cos_containerd)

- Ubuntu with containerd (ubuntu_containerd)

- Windows Long-Term Servicing Channel (LTSC) with containerd (windows_ltsc_containerd)

- Container-Optimized OS with Docker (cos): unsupported as of GKE v1.24

- Ubuntu with Docker (ubuntu): unsupported as of GKE v1.24

- Windows LTSC with Docker (windows_ltsc)

To view the most current list of available images, please visit the following URL: https://cloud.google.com/kubernetes-engine/docs/concepts/node-images#available_node_images.

Starting from GKE version 1.24, Docker-based images aren’t supported, and Google Cloud advises not to use them.
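
To see which operating system image and container runtime the nodes of an existing cluster actually use, the node status can be queried with kubectl. The sketch below is one possible way to do it; the helper name list_node_runtimes and the column headings are hypothetical, while the osImage and containerRuntimeVersion fields come from the standard Kubernetes node status.

```shell
# Hypothetical helper: print each node's OS image and container runtime version.
list_node_runtimes() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,OS_IMAGE:.status.nodeInfo.osImage,RUNTIME:.status.nodeInfo.containerRuntimeVersion'
}

# Example usage:
#   list_node_runtimes
```

On nodes using a containerd-based image, the runtime column should start with containerd://.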

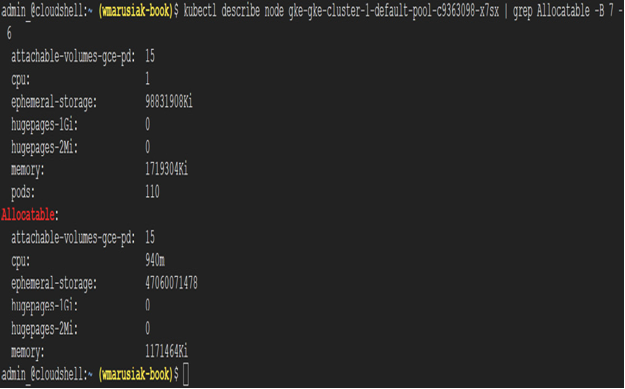

Because the nodes themselves consume CPU and RAM, it is worth checking how much is left for the containers. The larger the node, the more containers it can run. For the containers themselves, you can configure or limit resource usage. To check the resources available to run containers on a node, use the following command:

kubectl describe node NODE_NAME | grep Allocatable -B 7 -A 6

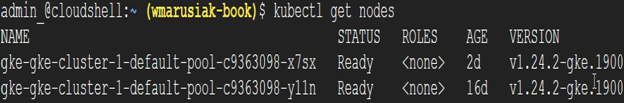

First, we need the name of a node, so we list the available nodes. This can be done by using the following command:

kubectl get nodes

The next screenshot shows the output of the previous command, with details about the cluster nodes, including their status, age, and Kubernetes version:

Figure 5.5 – Output of the kubectl get nodes command

Once we have a node name, we can check the resources available to run containers, as follows:

Figure 5.6 – Output of the command to check available resources to run containers on a node
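
The two steps above can also be combined into a single query that prints the allocatable CPU and memory of every node at once. This is only a sketch: the helper name list_allocatable and the column headings are hypothetical, while .status.allocatable is the field that kubectl describe node reports under Allocatable.

```shell
# Hypothetical helper: show allocatable CPU and memory for all nodes in one table.
list_allocatable() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,CPU:.status.allocatable.cpu,MEMORY:.status.allocatable.memory'
}

# Example usage:
#   list_allocatable
```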

This estimate doesn’t take the eviction threshold into account. Kubernetes can trigger Pod eviction when the available memory on a node falls below a certain point. The threshold can be defined as a percentage or as a quantity, for example, memory.available<10% or memory.available<1Gi.

More details about eviction can be found on the Kubernetes website at the following URL: https://kubernetes.io/docs/concepts/scheduling-eviction/node-pressure-eviction/#eviction-thresholds.
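
To make the two threshold forms concrete, the following sketch checks whether a given amount of available memory would fall below a threshold. It is a simplified illustration, not the kubelet's actual logic: the function name eviction_triggered is hypothetical, all values are whole numbers of MiB, only the %, Gi, and Mi suffixes are handled, and a percentage is assumed to be relative to the node's memory capacity.

```shell
# Hypothetical helper: succeeds (exit status 0) when memory.available < threshold.
# $1 = available memory (MiB), $2 = node capacity (MiB)
# $3 = threshold, e.g. 10% (share of capacity), 1Gi, or 500Mi (integers only)
eviction_triggered() (
  available=$1 capacity=$2 threshold=$3
  case $threshold in
    *%)  limit=$(( capacity * ${threshold%?} / 100 )) ;;  # strip the trailing %
    *Gi) limit=$(( ${threshold%Gi} * 1024 )) ;;           # GiB to MiB
    *Mi) limit=${threshold%Mi} ;;
    *)   echo "unsupported threshold: $threshold" >&2; exit 2 ;;
  esac
  [ "$available" -lt "$limit" ]
)

# Example usage: 300 MiB available on a 4096 MiB node, threshold 10% (409 MiB)
if eviction_triggered 300 4096 10%; then
  echo "below threshold"
fi
```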

Tip

The total available memory for Pods can be calculated by using the following formula:

ALLOCATABLE = CAPACITY - RESERVED - EVICTION-THRESHOLD

Details about GKE reservations can be found at the following URL: https://cloud.google.com/kubernetes-engine/docs/concepts/plan-node-sizes#memory_and_cpu_reservations.
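
As a rough worked example of this formula, the following sketch applies the tiered memory reservations described in the GKE documentation linked above: 255 MiB for nodes with less than 1 GiB of memory, otherwise 25% of the first 4 GiB, 20% of the next 4 GiB, 10% of the next 8 GiB, 6% of the next 112 GiB, and 2% of anything above 128 GiB, plus a 100 MiB eviction threshold. The function names are hypothetical, the figures are assumptions taken from that documentation and may change, and real nodes report slightly less capacity than the nominal machine size, so the result is only an approximation.

```shell
# Hypothetical helper: memory reserved within one tier, in hundredths of a MiB.
# $1 = capacity (MiB), $2 = tier lower bound, $3 = tier upper bound, $4 = percent
tier_reserved_x100() (
  capacity=$1 lower=$2 upper=$3 percent=$4
  if [ "$capacity" -le "$lower" ]; then
    echo 0
  else
    top=$capacity
    if [ "$top" -gt "$upper" ]; then top=$upper; fi
    echo $(( (top - lower) * percent ))
  fi
)

# Memory (MiB) reserved on a node with the given capacity (MiB), rounded down.
gke_reserved_memory_mib() (
  capacity=$1
  if [ "$capacity" -lt 1024 ]; then
    echo 255
  else
    total=0
    total=$(( total + $(tier_reserved_x100 "$capacity" 0 4096 25) ))
    total=$(( total + $(tier_reserved_x100 "$capacity" 4096 8192 20) ))
    total=$(( total + $(tier_reserved_x100 "$capacity" 8192 16384 10) ))
    total=$(( total + $(tier_reserved_x100 "$capacity" 16384 131072 6) ))
    if [ "$capacity" -gt 131072 ]; then
      total=$(( total + (capacity - 131072) * 2 ))
    fi
    echo $(( total / 100 ))
  fi
)

# ALLOCATABLE = CAPACITY - RESERVED - EVICTION-THRESHOLD (all in MiB).
# $2 is the eviction threshold, defaulting to an assumed 100 MiB.
allocatable_memory_mib() (
  capacity=$1 eviction=${2:-100}
  echo $(( capacity - $(gke_reserved_memory_mib "$capacity") - eviction ))
)

# A nominal 4 GiB node such as e2-medium: 4096 - 1024 - 100
allocatable_memory_mib 4096   # prints 2972
```

Under these assumptions, roughly 2.9 GiB of a nominal 4 GiB node would be left for Pods, which shows why small nodes lose a proportionally larger share of their memory to reservations.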

Now, let’s learn how GKE Standard differs from GKE in Autopilot mode.