Autopilot is a relatively new product from Google Cloud—it was released in February 2021.

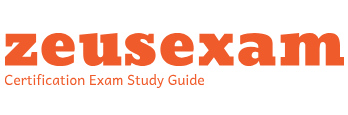

Following this announcement, GKE now offers two modes of usage: Standard and Autopilot. We just discussed Standard mode, where we can configure multiple GKE options and fine-tune it to our liking. Autopilot mode, however, aims at delivering industry best practices and eliminates all node management operations, allowing a focus on application deployment:

Figure 5.7 – GKE Autopilot architecture overview

In GKE Autopilot, there is no need to monitor the health of nodes or calculate the compute capacity of your cluster to accommodate workloads. You have the same Kubernetes experience as in Standard mode but with less operational overhead.

Google maintains a detailed feature comparison of the two modes, and we highly encourage you to review the significant differences: https://cloud.google.com/kubernetes-engine/docs/resources/autopilot-standard-feature-comparison.

One big difference between the two modes is the service-level agreement (SLA). Autopilot has an SLA that covers both the GKE control plane (99.95%) and your Pods (99.9%), whereas GKE Standard has an SLA only for the control plane: 99.5% for zonal clusters and 99.95% for regional clusters. For details on the GKE SLA, how the various GKE deployment types influence it, and which components it covers, refer to https://cloud.google.com/kubernetes-engine/sla.
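To make these percentages more tangible, we can convert them into a monthly downtime budget. The following is a minimal sketch, not an official calculation: the function name `allowed_downtime_minutes` is hypothetical, and the 30-day measurement window is an assumption, so check the SLA page for how Google actually defines the measurement period.

```python
# Minutes in a 30-day month, used as the measurement window.
# This window is an assumption made for illustration only.
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60


def allowed_downtime_minutes(
    sla_percent: float, window_minutes: int = MINUTES_PER_30_DAY_MONTH
) -> float:
    """Return the downtime budget, in minutes, implied by an availability percentage."""
    return window_minutes * (100.0 - sla_percent) / 100.0


# SLA figures quoted in the text above.
sla_targets = {
    "Standard control plane (zonal)": 99.5,
    "Standard control plane (regional)": 99.95,
    "Autopilot control plane": 99.95,
    "Autopilot Pods": 99.9,
}

for name, percent in sla_targets.items():
    budget = allowed_downtime_minutes(percent)
    print(f"{name}: {percent}% -> {budget:.1f} minutes per 30-day month")
```

Under this assumption, a 99.5% target allows about 216 minutes of downtime per month, while 99.95% allows about 21.6 minutes, which shows how much the choice between a zonal and a regional control plane matters.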

While GKE Autopilot solves many operational burdens (monitoring, best practices, autoscaling, and many others), it has limitations that need to be considered when running workloads.

Some of GKE Autopilot’s limitations are listed as follows:

- Pods run with a hardened configuration that provides enhanced security isolation. This means that Pods cannot run with elevated privileges that would give them control over the node.

- Permissions for highly privileged Kubernetes primitives, such as privileged Pods, are revoked, which restricts the ability to access, modify, or directly control the underlying node VM.

- Most external monitoring tools will not work because the access they require has been removed. In that case, native Google Cloud monitoring must be used.

- We cannot create custom subnets. Subnets are used to divide our cluster’s network into smaller segments. In GKE Autopilot, subnets are created automatically based on the number of nodes in our cluster.

- We cannot use some Kubernetes networking features. Support depends on the GKE version, so confirm the current status of features such as NetworkPolicy and Ingress in the feature comparison linked earlier before relying on them.

These are just a few of the major limitations. Before using Autopilot in production, we encourage you to read more at the following link: https://cloud.google.com/kubernetes-engine/docs/concepts/autopilot-overview#security-limitations.
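Before moving a workload to Autopilot, it can help to scan its Pod specs for settings that request elevated privileges. The following is a rough sketch under stated assumptions: the helper `find_privileged_settings` and the example spec are hypothetical, the Pod spec is represented as a plain Python dictionary, and the checked fields (`securityContext.privileged`, `hostNetwork`, `hostPID`, `hostIPC`) are standard Kubernetes fields that commonly grant node-level access. Which settings Autopilot actually rejects is decided by GKE, not by this check.

```python
from typing import Any


def find_privileged_settings(pod_spec: dict[str, Any]) -> list[str]:
    """Return findings for Pod settings that request elevated privileges.

    Illustrative only: this is not Autopilot's admission logic.
    """
    findings = []

    # Host namespace sharing is configured at the Pod level.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if pod_spec.get(field):
            findings.append(f"spec.{field} is enabled")

    # Privileged mode is configured per container.
    for group in ("initContainers", "containers"):
        for container in pod_spec.get(group, []):
            security_context = container.get("securityContext") or {}
            if security_context.get("privileged"):
                name = container.get("name", "?")
                findings.append(f"{group}[{name}] sets securityContext.privileged")

    return findings


# Hypothetical Pod spec with two settings worth reviewing.
example_spec = {
    "hostNetwork": True,
    "containers": [
        {"name": "app", "image": "nginx"},
        {
            "name": "agent",
            "image": "example/agent",
            "securityContext": {"privileged": True},
        },
    ],
}

for finding in find_privileged_settings(example_spec):
    print(finding)
```

A check like this only surfaces candidates for review. The security limitations page linked above remains the reference for what Autopilot permits.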

GKE offers multiple management interfaces, and Google Cloud provides full capabilities in each of them. The upcoming parts of the chapter will guide us through cluster management and configuration in both the CLI and Cloud Console.

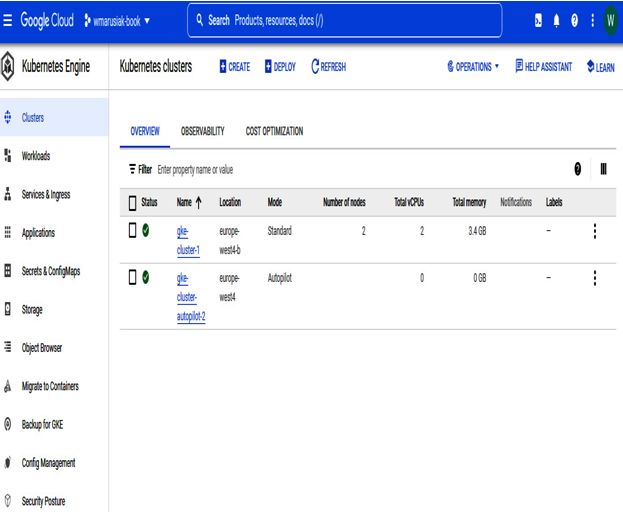

We already know Cloud Console from the previous chapter, where we worked heavily with Compute Engine and we worked with instances. The following screenshot shows two GKE clusters running in our project. The console looks familiar to GCE:

Figure 5.8 – GKE in Cloud Console

We will show you how to deploy GKE Standard and GKE Autopilot in the upcoming section.