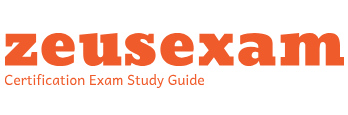

With this load balancer configuration, you set up a regional public IP address that is reachable from the internet but always points to a backend in a single region. Users (or Compute Engine VMs with internet access) in any location can access such services. However, the load balancer can only distribute traffic between instances in the same region. For high availability, you can deploy instances in multiple zones of that region.

An external network TCP/UDP load balancer distributes traffic at the TCP/UDP (Layer 4) level on any port. It works in passthrough mode, which means that it preserves the client’s IP address and that backend responses go directly to clients, bypassing the load balancer:

Figure 9.39 – External network TCP/UDP load balancer examples

The preceding figure presents an example with two load balancers. The first is used by Service A in europe-central2 and is backed by an instance group in the same region. The second is used by Service B in australia-southeast1 and is likewise backed by an instance group in its own region. Each represents a separate service that is globally accessible but served from a single region. As a result, users in different locations can access both services, and users who are closer to a given load balancer will experience lower latency.

Internal TCP/UDP load balancing

The previous sections described load balancers that handle traffic originating from the internet. An internal TCP/UDP (Layer 4) load balancer distributes traffic originating from internal clients or internal Compute Engine instances in internal networks. It protects your internal architecture from exposure because the backends stay hidden behind the load balancer’s internal IP address. As a passthrough load balancer, it preserves the client IP address, and it can balance traffic on any TCP or UDP port.

You can access this load balancer from the VPC network where it is running or from another VPC network via VPC peering or VPN. It can also be accessed from an on-premises location via VPN or Interconnect.

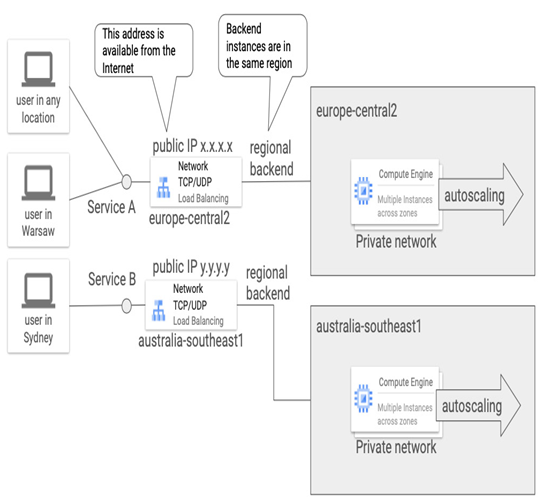

Although we call it a load balancer, there is no single box processing traffic. Instead, it is a service that’s distributed at the lower layers of Google’s software-defined networking. This provides scalability, high throughput, and low latency for balanced workloads. The following figure presents one of the use cases of an internal TCP/UDP load balancer:

Figure 9.40 – Internal TCP/UDP load balancers used behind a global external HTTP(S) load balancer

In this tiered architecture, external users connect to an application available globally via a public IP address, served by a global external HTTP(S) load balancer. Behind it, we have a web server tier that’s served from two regions. Users are directed to the closest available region. In every region, another load balancer – an internal TCP/UDP load balancer – distributes internal regional traffic from a web server tier to a database tier. The database tier is not accessible externally and consists of multiple instances deployed across zones in a region. In case of a failure in a zone, traffic is redirected to instances in another zone in the same region.
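The zonal failover described above can be illustrated with a small toy model. This is only a sketch under stated assumptions: the `Backend` class, the `pick_backend` function, the instance names, and the hash-based selection are all hypothetical and are not Google's actual algorithm. The model simply shows that when the instances in one zone are marked unhealthy, connections are sent to healthy instances in the remaining zones of the same region.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """Hypothetical model of one database instance behind the load balancer."""
    name: str
    zone: str
    healthy: bool = True


def pick_backend(backends, connection_key):
    """Pick a healthy backend for a connection.

    Toy model only: hashes the connection key and maps it onto the list of
    healthy backends. The real service is distributed and works differently.
    """
    candidates = [b for b in backends if b.healthy]
    if not candidates:
        raise RuntimeError("no healthy backends in the region")
    digest = hashlib.sha256(connection_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(candidates)
    return candidates[index]


# Assumed database tier spread across three zones of one region.
db_tier = [
    Backend("db-1", "europe-central2-a"),
    Backend("db-2", "europe-central2-b"),
    Backend("db-3", "europe-central2-c"),
]

# Assumed connection identifier (source, destination, protocol).
key = "10.0.1.5:40312->10.0.2.10:5432/tcp"
print("normal operation:", pick_backend(db_tier, key).name)

# Simulate a failure of zone "a": its instances are marked unhealthy.
degraded = [
    Backend(b.name, b.zone, healthy=(b.zone != "europe-central2-a"))
    for b in db_tier
]
print("zone a down:", pick_backend(degraded, key).zone)
```

Whatever backend the first call selects, the second call can only return an instance from zone `b` or `c`, which mirrors the behavior of traffic being redirected within the region.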

Selecting a particular load balancer depends mostly on your application architecture. Here are some examples:

- A global external HTTP(S) load balancer is the best fit when a web-based application must be available from the internet and is expected to provide a good user experience (by minimizing latency) and high availability by directing traffic to the closest operational regional backend.

- A global external TCP/SSL load balancer should be used to allow access to an application from the internet over protocols other than HTTP(S).

- An external network TCP/UDP load balancer is a good choice for your internet-facing application if you need the client IP address to be preserved or your service needs to load balance UDP traffic.

- An internal TCP/UDP load balancer should be used to distribute workloads in the same VPC and keep your backend architecture hidden.
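The selection guidance in the preceding list can be condensed into a small helper. This is a minimal sketch, not an official decision tool: the function name `choose_load_balancer` and its parameters are hypothetical, and it covers only the four load balancer types discussed in this section. Real-world selection involves more factors, which the Google Cloud documentation covers in detail.

```python
def choose_load_balancer(internet_facing, protocol, preserve_client_ip=False):
    """Suggest a load balancer type based on the rules of thumb in this section.

    Simplified, hypothetical helper: it only knows the four load balancer
    types discussed here and ignores every other selection criterion.
    """
    protocol = protocol.upper()
    if protocol not in {"HTTP", "HTTPS", "TCP", "SSL", "UDP"}:
        raise ValueError(f"unsupported protocol: {protocol}")

    # Traffic that stays inside the VPC goes through the internal load balancer.
    if not internet_facing:
        return "internal TCP/UDP load balancer"

    # UDP traffic or a preserved client IP calls for the passthrough option.
    if protocol == "UDP" or preserve_client_ip:
        return "external network TCP/UDP load balancer"

    # Web applications exposed to the internet.
    if protocol in {"HTTP", "HTTPS"}:
        return "global external HTTP(S) load balancer"

    # Remaining internet-facing TCP or SSL traffic.
    return "global external TCP/SSL load balancer"


print(choose_load_balancer(True, "https"))
print(choose_load_balancer(True, "udp"))
print(choose_load_balancer(True, "tcp", preserve_client_ip=True))
print(choose_load_balancer(False, "tcp"))
```

The order of the checks encodes a design choice: the internal case is tested first, and the need to preserve the client IP address takes priority over the protocol, because only the passthrough load balancers described in this section keep it intact.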

Note

Check out the following link to the Google Cloud documentation on choosing the best load balancer for your workloads: https://cloud.google.com/load-balancing/docs/choosing-load-balancer.

In this chapter, we learned how to create global VPC networks for workloads that span multiple Google Cloud projects. We also explored how to connect Google Cloud with on-premises data centers. One of the most important topics we covered was how to protect workloads using firewall rules and Cloud Armor. Additionally, we delved into Google Cloud networking services such as Cloud DNS and different types of load balancers. We also learned how to use load balancers to improve the security and availability of globally available applications.

As we wrap up our discussion on networking, let’s look ahead to the next chapter, where we’ll continue to expand our knowledge by exploring the essential topic of data processing services.