When an application outgrows a single Compute Engine VM, even one of the largest machine type, it is time to use managed instance groups and load balancers to handle the additional traffic.

Refer to Figure 4.67 in Chapter 4, where this concept was initially introduced. A managed instance group is a set of identical Compute Engine instances deployed from an instance template in a single zone or across multiple zones of a region. Thanks to the autoscaling feature, the group can dynamically grow or shrink depending on the load. When a health check detects that one of the instances has failed, that instance is recreated. When combined with a load balancer, a managed instance group can work as the backend of an application. In this case, the load balancer's role is to distribute traffic to instances based on conditions such as CPU utilization or the number of requests.
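
The autoscaling behavior described above can be illustrated with a minimal sketch. It assumes a simplified target-tracking rule (resize the group so that average CPU utilization moves toward a target, within minimum and maximum bounds). The function name `recommended_size` and its parameters are hypothetical and are not part of any Google Cloud API; the real autoscaler also considers factors such as stabilization periods that are not modeled here.

```python
def recommended_size(current_size, observed_cpu_pct, target_cpu_pct,
                     min_size, max_size):
    """Return a group size that moves average CPU toward the target.

    Hypothetical helper for illustration only. Utilization values are
    integer percentages (for example, 90 means 90%).
    """
    if current_size <= 0:
        return min_size
    # Total load is current_size * observed utilization; divide it by the
    # target utilization and round up (integer ceiling division).
    load = current_size * observed_cpu_pct
    raw = (load + target_cpu_pct - 1) // target_cpu_pct
    # Never leave the configured bounds of the group.
    return max(min_size, min(max_size, raw))


print(recommended_size(4, 90, 60, min_size=2, max_size=10))  # 6: scale out
print(recommended_size(4, 15, 60, min_size=2, max_size=10))  # 2: scale in to the minimum
print(recommended_size(8, 95, 60, min_size=2, max_size=10))  # 10: capped at the maximum
```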

A managed instance group is just one of the supported backend types for a load balancer in Google Cloud. Depending on the load balancer's type, other possible backends include unmanaged instance groups (instances configured individually and managed by a user) and serverless backends such as App Engine, Cloud Run, or Cloud Functions. Google Cloud Storage can also function as a backend that serves static content to users. This section looks at selected load balancer types for managed instance groups. Note that all load balancer types are managed by Google and don't require installation, patching, or maintenance.

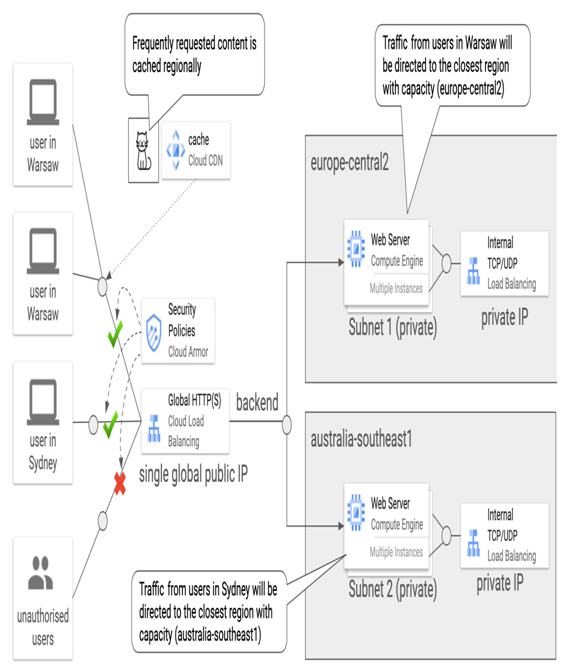

Global external HTTP(S) load balancer

A global external HTTP(S) load balancer distributes Layer 7 traffic from the internet to VMs or serverless services. It offers a single public anycast IP address that can be used across multiple backend instances in multiple regions. Requests that come from users are sent to the closest backend instances that can process them. This significantly improves the response time for globally available applications.
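
To make the "closest backend that can process the request" idea concrete, here is a minimal sketch. It makes several assumptions: geographic distance stands in for network proximity, region coordinates are approximate city locations, and the `Backend` class and `pick_backend` function are hypothetical names. It is an illustration of the selection logic, not how the load balancer is implemented.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass
class Backend:
    region: str
    lat: float            # approximate latitude of the region (assumption)
    lon: float            # approximate longitude of the region (assumption)
    healthy: bool = True
    has_capacity: bool = True


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * asin(sqrt(a))


def pick_backend(user_lat, user_lon, backends):
    """Pick the nearest backend that is healthy and has spare capacity."""
    candidates = [b for b in backends if b.healthy and b.has_capacity]
    if not candidates:
        raise RuntimeError("no backend can serve the request")
    return min(candidates,
               key=lambda b: distance_km(user_lat, user_lon, b.lat, b.lon))


backends = [
    Backend("europe-central2", 52.23, 21.01),        # Warsaw
    Backend("australia-southeast1", -33.87, 151.21),  # Sydney
]

# A user near Berlin is served from the European region.
print(pick_backend(52.52, 13.40, backends).region)  # europe-central2

# If that region cannot take more traffic, the request spills over.
backends[0].has_capacity = False
print(pick_backend(52.52, 13.40, backends).region)  # australia-southeast1
```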

Because this type of load balancer faces the internet, it is often configured with Cloud Armor. Cloud Armor provides Distributed Denial of Service (DDoS) protection and Web Application Firewall (WAF) capabilities. It filters requests at the edge of the Google Cloud network, close to their source, so that unwanted traffic is stopped before it reaches a backend. In addition, with Cloud Armor, you can configure security policies that allow or deny traffic to a backend based on a source IP address, an IP range, or the geographical location of the client.
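
The allow and deny logic of a security policy can be sketched as a prioritized rule list. This is a simplified model, not the Cloud Armor API: the `Rule` class and `evaluate` function are hypothetical, the IP ranges come from the reserved documentation blocks, and the region codes are illustrative. It assumes that rules are checked in ascending priority order and that the first match decides the outcome, with a default action when nothing matches.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Rule:
    priority: int              # lower number is evaluated first
    action: str                # "allow" or "deny"
    ip_ranges: tuple = ()      # CIDR ranges, e.g. ("203.0.113.0/24",)
    region_codes: tuple = ()   # two-letter country codes, e.g. ("PL",)

    def matches(self, source_ip, region_code):
        addr = ipaddress.ip_address(source_ip)
        in_range = any(addr in ipaddress.ip_network(r) for r in self.ip_ranges)
        return in_range or region_code in self.region_codes


def evaluate(rules, default_action, source_ip, region_code):
    """Return the action of the first matching rule, else the default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(source_ip, region_code):
            return rule.action
    return default_action


policy = [
    Rule(priority=100, action="deny", ip_ranges=("203.0.113.0/24",)),
    Rule(priority=200, action="allow", region_codes=("PL", "AU")),
]

print(evaluate(policy, "deny", "203.0.113.7", "PL"))    # deny: blocked range wins
print(evaluate(policy, "deny", "198.51.100.10", "PL"))  # allow: permitted location
print(evaluate(policy, "deny", "198.51.100.10", "US"))  # deny: default action
```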

An HTTP(S) load balancer is also often paired with Cloud Content Delivery Network (Cloud CDN). Cloud CDN uses Google's globally distributed points of presence to cache HTTP(S) content, providing faster delivery to users. When requested content is not in the cache at a given location, the request is forwarded to the load balancer and on to the backend instances, which return the content. Once retrieved, the content is stored at that location to serve future requests.
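
The cache miss and cache fill flow described above can be sketched as follows. This is a minimal model of a single cache location under stated assumptions: the `EdgeCache` class and the `origin` function are hypothetical, and expiry, cache keys, and cache-control headers are deliberately left out.

```python
class EdgeCache:
    """Toy model of one cache location in front of a backend (origin)."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # callable that reaches the backend
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, url):
        if url in self._store:
            # Cache hit: served from the edge, the backend is not contacted.
            self.hits += 1
            return self._store[url]
        # Cache miss: forward to the backend, then keep a copy for later.
        self.misses += 1
        content = self._fetch(url)
        self._store[url] = content
        return content


origin_calls = []


def origin(url):
    """Stand-in for the load balancer and backend instances."""
    origin_calls.append(url)
    return f"content of {url}"


cache = EdgeCache(origin)
cache.get("/logo.png")  # first request: miss, fetched from the backend
cache.get("/logo.png")  # second request: hit, served from the cache
print(cache.hits, cache.misses, len(origin_calls))  # 1 1 1
```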

The following figure shows an example of a global external HTTP(S) load balancer serving content to users worldwide. It leverages Cloud Armor to deny traffic from unauthorized users and Cloud CDN to cache frequently requested content. Authorized users can access backend services via a single global public IP address. It is the load balancer's role to direct traffic to the closest backend that can process the request. On the backend side, there are regional managed instance groups with autoscaling enabled. Once the volume of requests reaches a certain level, additional instances are deployed, up to a specified maximum. In this example, users from Warsaw will be served by the backend in europe-central2, and the backend in australia-southeast1 will receive traffic from users in Sydney:

Figure 9.34 – Global external HTTP(S) load balancer example with Cloud CDN and Cloud Armor